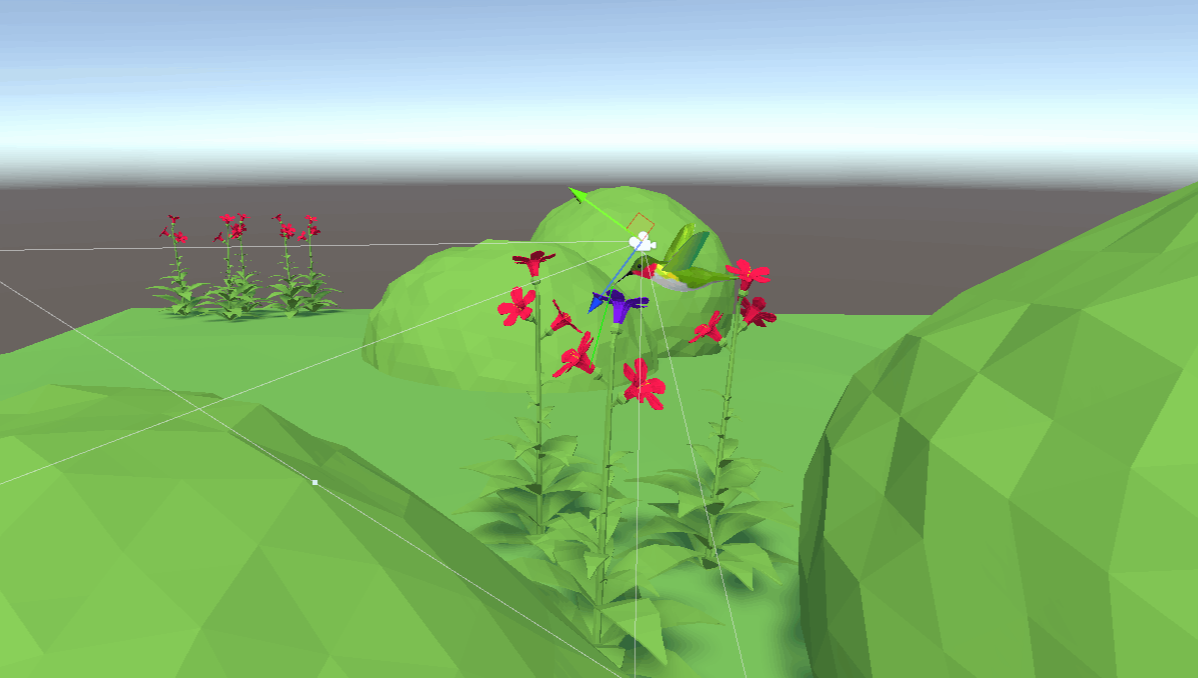

Hummingbots learn to "see" using AI

For my dissertation, "Behavioural Differences in Intelligent Virtual Agents With and Without Active Vision Systems", I trained a virtual "hummingbot" (a hummingbird bot :) ) to locate flowers in a virtual environment and "drink" their nectar. It did this using only its eyes (an RGB camera, i.e. active vision), with no other form of external input. I then compared its behaviour with that of a second hummingbot that was given the location and orientation of the flowers, its own position relative to the environment, and several other inputs.

Read the thesis here.